The best detail, I think, is that they DoorDashed Arby's.

4 · 1 day ago

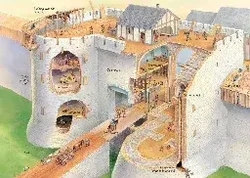

If you want a lasting legacy, build a big wall

1 · 10 days ago

No, I know. I didn't see any possible market for a product like this, but you mentioned you're already doing what it does, just manually, so I was wondering how much value you see in it.

I don't know if it changed since you commented, but the article I read included a lot more than that.

1 · 10 days ago

Would you consider spending $600 plus $7/month for this? (Assuming it were actually secure, unlike this one.)

5 · 11 days ago

What could they possibly tell me about my health by visually inspecting my shit? I see the website mentions detecting blood, but I'm pretty sure I can do that too…

I expect some of it's true, but most of it sounds made up. It has a Where's Waldo vibe. I'd be interested in reading more sourced info about this.

2 · 13 days ago

I responded to your other comment, but yes, I think you could set up an LLM agent with a camera and microphone and then continuously provide sensory input for it to respond to (in the same way I'm continuously receiving input from my "camera" and "microphones" as long as I'm awake).

4 · 13 days ago

I'm just someone who's interested in and reads about the subject, so I could be mistaken about details, but:

When we train an LLM, we're trying to mimic the way neurons work. Training is the really resource-intensive part. Right now, companies will train a model, then use it for 6-12 months or so before releasing a new version.

When you and I have a "conversation" with ChatGPT, it's always with that base model. It isn't actively learning from the conversation in the sense that new neural pathways are being created. What's actually happening is that a prompt like this is submitted: `{{openai crafted preliminary prompt}} + "Abe: Hello I'm Abe"`.

Then it replies, and the next thing I type gets submitted like this: `{{openai crafted preliminary prompt}} + "Abe: Hello I'm Abe" + {{agent response}} + "Abe: Good to meet you computer friend!"`

And so on. Each time, you're only talking to that base-level LLM, but feeding it the history of the conversation along with your new prompt.
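Here's a toy Python sketch of that resubmission idea. It isn't OpenAI's actual code or API: `PRELIMINARY_PROMPT` and `fake_model` are made-up stand-ins. The point is that the model function never changes between turns; only the text handed to it grows.

```python
# Toy illustration of a chat front end talking to a frozen, stateless model.
# ASSUMPTIONS: PRELIMINARY_PROMPT and fake_model are hypothetical stand-ins,
# not OpenAI's real prompt or API.

PRELIMINARY_PROMPT = "{{openai crafted preliminary prompt}}"  # hypothetical placeholder


def fake_model(prompt: str) -> str:
    """Hypothetical frozen model: a pure function of its input text.

    It keeps no state between calls, so anything it "remembers" must be
    present in `prompt`.
    """
    turns = prompt.count("Abe:")
    return f"Agent: (reply to turn {turns})"


def build_prompt(history: list[str], new_message: str) -> str:
    """Concatenate the fixed preamble, every earlier turn, and the new message."""
    return "\n".join([PRELIMINARY_PROMPT, *history, new_message])


def chat(messages: list[str]) -> list[str]:
    """Run a conversation and return the full prompt sent on each turn."""
    history: list[str] = []
    prompts_sent: list[str] = []
    for text in messages:
        user_turn = f"Abe: {text}"
        prompt = build_prompt(history, user_turn)
        prompts_sent.append(prompt)
        reply = fake_model(prompt)
        # The only "memory" is this list, which gets re-sent in full next turn.
        history.extend([user_turn, reply])
    return prompts_sent


if __name__ == "__main__":
    for p in chat(["Hello I'm Abe", "Good to meet you computer friend!"]):
        print(p)
        print("---")
```

Each printed prompt contains the previous one in full, which is the whole trick behind the model "remembering" earlier turns.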

You're right to point out that they've now got the agents creating their own summaries of the conversation so they can "remember" more. But if we're trying to argue for consciousness in the way we think of it with animals, not even humans yet, then I think the ability to actively synthesize experiences into the self is a requirement.
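A rough sketch of how that summary trick could work, under made-up assumptions: `summarize` is a hypothetical placeholder (a real system would presumably have the model write the summary itself), and `MAX_TURNS` is an arbitrary limit. Older turns get folded into one summary line, but nothing about the model itself is updated, which is the distinction I'm drawing.

```python
# Sketch of "remembering" via summaries.
# ASSUMPTIONS: summarize() is a hypothetical stand-in for asking the model to
# summarize; MAX_TURNS is an arbitrary budget chosen for illustration.

MAX_TURNS = 4  # hypothetical number of entries kept in the prompt


def summarize(turns: list[str]) -> str:
    """Hypothetical summarizer: keeps only the first three words of each turn."""
    return "Summary: " + " | ".join(" ".join(t.split()[:3]) for t in turns)


def compact(history: list[str], max_turns: int = MAX_TURNS) -> list[str]:
    """Fold the oldest turns into one summary line once history gets too long.

    The model's weights never change; this only rewrites the text that will
    be fed back in on the next turn.
    """
    if len(history) <= max_turns:
        return history
    overflow = len(history) - max_turns + 1  # +1 leaves room for the summary line
    return [summarize(history[:overflow]), *history[overflow:]]
```

The summary is lossy by design: detail is traded for room in the prompt, and the "experience" lives only in that text, not in the model.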

A dog remembers when it found food in a certain place on its walk, or when it got stabbed by a porcupine, and will change its future behavior in response.

Again, I'm not an expert, but I expect there's a way to incorporate this type of learning in near-real time. Besides the technical work of figuring it out, though, doing so wouldn't be very cost-effective compared to the way they're doing it now.

3 · 13 days ago

Yeah, it seems like the major obstacles to saying an LLM is conscious, at least in an animal sense, are 1) setting it up to continuously evaluate/generate responses even without a user prompt and 2) allowing that continuous analysis/response to be incorporated into the LLM's training.

The first one seems like it would be comparatively easy: get sufficient processing power and memory, then program it to evaluate and respond to all previous input once a second or whatever.
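The "easy" first part might look something like this sketch. `read_sensors` and `model` are hypothetical callables you'd plug in, not a real API, and `ticks` just stops the loop so the example finishes. Note it only covers point 1: the context grows, but nothing feeds back into training.

```python
import time
from typing import Callable

# ASSUMPTIONS: read_sensors and model are hypothetical callables supplied by
# the caller; nothing here is a real robotics or LLM API. `ticks` bounds the
# loop so the sketch terminates; a real agent would loop indefinitely.


def run_agent(
    read_sensors: Callable[[], str],
    model: Callable[[str], str],
    interval_s: float = 1.0,
    ticks: int = 5,
) -> list[str]:
    """Poll for input on a fixed schedule and respond, with or without a user prompt."""
    context: list[str] = []
    outputs: list[str] = []
    for _ in range(ticks):
        observation = read_sensors()  # e.g. a camera/microphone transcript
        context.append(f"Observation: {observation}")
        # Re-evaluate all input received so far on every tick.
        response = model("\n".join(context))
        context.append(f"Agent: {response}")
        outputs.append(response)
        time.sleep(interval_s)
    return outputs


if __name__ == "__main__":
    frames = iter(["quiet room", "door opens", "dog barks"])
    result = run_agent(
        read_sensors=lambda: next(frames, "nothing new"),
        model=lambda ctx: f"noted {ctx.count('Observation:')} observations",
        interval_s=0.0,
        ticks=3,
    )
    print(result)
```

Sleeping a fixed interval after each tick is the simplest option; it drifts a little because the work itself takes time, which doesn't matter for a "once a second or whatever" schedule.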

The second one seems more challenging. As I understand it, training an LLM is very resource-intensive. Right now, when it "remembers" a conversation, it's just because we prime it by feeding in every previous interaction before the most recent query when we hit submit.

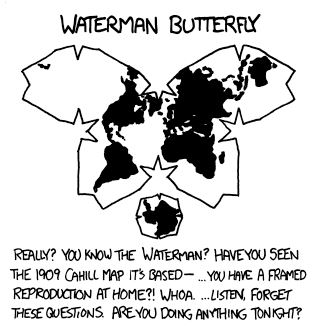

Every day? But not at the scale where I need to view the whole globe.

I wasn't aware of LibreOffice Online. There's an interesting message saying you can use it, but with a built-in disclaimer that appears when you try to have more than 20 users, saying "this isn't good for that": https://www.libreoffice.org/download/libreoffice-online/

My dogs prefer lettuce, as long as it's crispy.

6 · 18 days ago

For me, it helps to think of error correction. When two computers exchange information, it's not just one way, like one machine sending a continuous stream to the other and then you're done. The information is broken up into pieces, and the receiving machine might say, "I didn't receive these packets, can you resend?" There are also things like checking a hash to make sure the copied file matches the original.
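To make the analogy concrete, here's a toy Python sketch of both mechanisms: numbered packets with resend requests, and hash checks. The lossy channel, the 8-byte packet size, the use of SHA-256 per packet, and the retry limit are all assumptions for illustration. Real protocols like TCP use acknowledgments, timeouts, and a much cheaper checksum, so this only mirrors the general idea.

```python
import hashlib
import random

# ASSUMPTIONS: the simulated channel, PACKET_SIZE, per-packet SHA-256, and
# max_rounds are made up for illustration; real protocols differ in detail.

PACKET_SIZE = 8  # hypothetical; real packets are far larger


def digest(chunk: bytes) -> str:
    return hashlib.sha256(chunk).hexdigest()


def split_into_packets(data: bytes) -> dict[int, bytes]:
    """Number each fixed-size chunk so the receiver can say which ones are missing."""
    return {
        seq: data[offset:offset + PACKET_SIZE]
        for seq, offset in enumerate(range(0, len(data), PACKET_SIZE))
    }


def unreliable_link(
    packets: dict[int, tuple[bytes, str]],
    rng: random.Random,
    loss_rate: float,
    corrupt_rate: float,
) -> dict[int, tuple[bytes, str]]:
    """Simulated channel: each packet may be dropped or have its first byte damaged."""
    delivered = {}
    for seq, (chunk, checksum) in packets.items():
        if rng.random() < loss_rate:
            continue  # packet lost in transit
        if chunk and rng.random() < corrupt_rate:
            chunk = bytes([chunk[0] ^ 0xFF]) + chunk[1:]  # flip bits in the first byte
        delivered[seq] = (chunk, checksum)
    return delivered


def transfer(
    data: bytes,
    loss_rate: float = 0.3,
    corrupt_rate: float = 0.1,
    seed: int = 0,
    max_rounds: int = 100,
) -> tuple[bytes, int]:
    """Send `data` over the lossy link; return the verified copy and rounds used."""
    rng = random.Random(seed)
    packets = split_into_packets(data)
    file_hash = digest(data)  # sender also announces a hash of the whole file
    received: dict[int, bytes] = {}
    outstanding = set(packets)
    rounds = 0
    while outstanding and rounds < max_rounds:
        rounds += 1
        # Sender (re)transmits every packet the receiver has asked for.
        batch = {seq: (packets[seq], digest(packets[seq])) for seq in outstanding}
        for seq, (chunk, checksum) in unreliable_link(batch, rng, loss_rate, corrupt_rate).items():
            if digest(chunk) == checksum:  # per-packet check discards damaged packets
                received[seq] = chunk
        # Receiver: "I didn't receive these packets, can you resend?"
        outstanding = set(packets) - set(received)
    if outstanding:
        raise RuntimeError(f"gave up with {len(outstanding)} packets outstanding")
    copy = b"".join(received[seq] for seq in sorted(received))
    if digest(copy) != file_hash:  # whole-file check: does the copy match the original?
        raise ValueError("copy does not match the original")
    return copy, rounds
```

The human version of the last two steps is "I think you're saying X, is that correct?": checking that what arrived matches what was sent, and asking again for whatever didn't.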

How much more error correction do you think we should have in human conversation, when your idea of the "file transfer protocol" is different from the other participant's? "I think you're saying X, is that correct?" Even if you think you completely understand, a lot of the time the answer is "No, actually… blah blah."

You brought up the idea of neurodivergents providing more detail, which can be helpful. But even there, one person may have a different idea about which details are relevant, or what the intended goal of the conversation is.

Taking a step beyond that, I recognize that I'm not a computer, and I'm prone to making errors. I may think I'm perfectly conveying all the necessary information, but experience has shown that's not always true. Whether the problem is on my end or the other person's, if I'm trying to accomplish a given objective, it's in my own interest to take extra steps to make sure there's no misunderstanding.

That sounds exciting! A couple of catty comments in here, but I think you're doing good work.

Super interesting!

Song titles from back then are funny, like "The Little Lost Child."

Also got me thinking how these songs were like the megahits of their time and I couldn’t hum a note or give the slightest description of the lyrics.

I remember seeing the video where they caught "the shooter" trying to hide among the other participants. Of course, it later turned out that the person they "caught" was Gambia, who hadn't shot at anyone. It's a good reminder that first impressions of an incident like this can be misleading, and if you don't check in later, you may keep holding that false impression.