On the second part: that is only half true. Yes, there are LLMs out there that search the internet, summarize what they find, and reference some of the websites.

However, it is not rare for them to add their own “info” that isn’t in the cited source at all. If you use the LLM to find sources and then read those instead, sure. But the output of the LLM itself should still be taken with a HUGE grain of salt and not be relied on for anything critical, even if it comes with a nice citation.

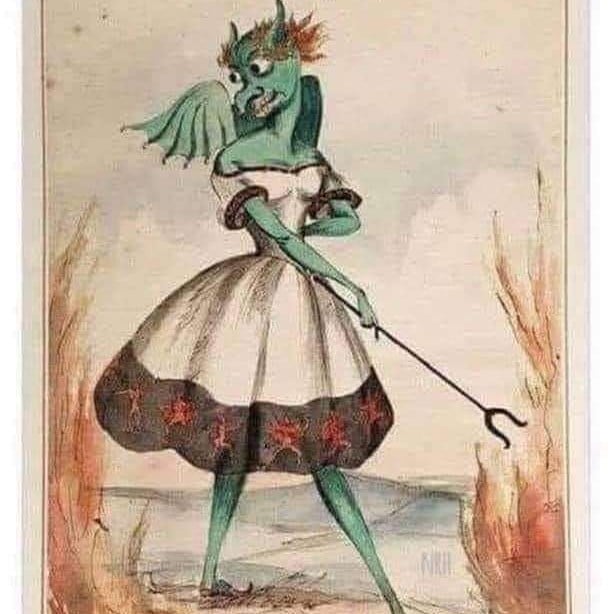

And also because Animate Dead, the spell the blurb in the meme is from, reads: